DirectAdmin 1.662

- Thread starter fln

- Start date

- Joined

- Aug 30, 2021

- Messages

- 1,168

@sparek, this is a control flag for an upcoming feature that will be released with DA 1.663. System info will show disk usage information. Sneak peek:

Code:

# curl -s $(da api-url)/api/system-info/fs | jq .
[
  {
    "device": "/dev/vda1",
    "mountPoint": "/",
    "fileSystem": "ext4",
    "totalBytes": 82944933888,
    "availableBytes": 16258412544,
    "reservedBytes": 4260466688,
    "usedBytes": 62426054656
  }
]

Richard G

Verified User

Great! Will that show in bytes as shown? Or will MB or GB be used in the GUI?
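Until the GUI answers that, the raw byte counts are easy to convert on the client side. Below is a minimal Python sketch that formats the sample payload from the post above using binary units. The helper names `human` and `summarize` are made up for illustration, and the sketch says nothing about which units DirectAdmin itself will display.

```python
import json

# Sample payload copied from the post above (one filesystem entry).
SAMPLE = """[{"device": "/dev/vda1", "mountPoint": "/", "fileSystem": "ext4",
"totalBytes": 82944933888, "availableBytes": 16258412544,
"reservedBytes": 4260466688, "usedBytes": 62426054656}]"""


def human(n: int) -> str:
    """Format a byte count using binary units (KiB, MiB, GiB, ...)."""
    units = ["B", "KiB", "MiB", "GiB", "TiB", "PiB"]
    value = float(n)
    for unit in units:
        # Stop at the first unit where the value fits, or at the largest unit.
        if value < 1024 or unit == units[-1]:
            return f"{value:.1f} {unit}"
        value /= 1024


def summarize(payload: str) -> list[str]:
    """Return one human-readable usage line per filesystem entry."""
    lines = []
    for fs in json.loads(payload):
        pct = 100 * fs["usedBytes"] / fs["totalBytes"]
        used, total = human(fs["usedBytes"]), human(fs["totalBytes"])
        lines.append(
            f'{fs["mountPoint"]} ({fs["device"]}): {used} used of {total} ({pct:.0f}%)'
        )
    return lines


if __name__ == "__main__":
    print("\n".join(summarize(SAMPLE)))
```

In the sample, `availableBytes + reservedBytes + usedBytes` adds up exactly to `totalBytes`, so reserved blocks are reported separately from free space.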

unihostbrasil

Verified User

@fln - There is a file permissions issue with this version - The files /usr/local/directadmin/data/users/*/domains/domain.com.key are being changed to owner "root". The /usr/local/directadmin/scripts/set_permissions.sh script corrects this (for the "mail" user/group), however, with each panel update (even hotfix) the permission returns to root.

@petersconsult - Maybe your problem is related to this. We also had a problem for this reason.

- Joined

- Aug 30, 2021

- Messages

- 1,168

@unihostbrasil, the latest release (compared to earlier DirectAdmin versions) started using root:root ownership for the server certificate files:

cakey=/usr/local/directadmin/conf/cakey.pem
cacert=/usr/local/directadmin/conf/cacert.pem
carootcert=/usr/local/directadmin/conf/carootcert.pem

These TLS certificates and key are only used by the DirectAdmin service (for the server name certificate). When CustomBuild copies these certificates to other services like apache, nginx, exim,... it changes the permissions as needed.

All user owned domain certificates in /usr/local/directadmin/data/users/*/domains/domain.com.key used to have and continue to have diradmin:access ownership.

Could it be that DirectAdmin is configured to use a single domain certificate as the server hostname certificate in directadmin.conf?

unihostbrasil

Verified User

In our DA configuration, we point to the certificate of a specific domain, not the hostname certificate. When using custom cakey/cacert/carootcert settings, DA improperly changed the permissions of these files to root:root. As these certificate files are also used by other services (exim, dovecot, ...), services that use these files had problems.

Would it be possible to revert this change? And always document all DA changes (main updates and hotfixes)? This makes server administration much easier and helps identify post-update problems.

Thanks

- Joined

- Aug 30, 2021

- Messages

- 1,168

From DirectAdmin's perspective this is a misconfiguration (pointing the server cert to ./data/users/...). The server host name certificate is managed separately from the domain certificates, and such a configuration creates a nasty circular dependency. For example, when it is time to renew the certificate, it would be renewed twice.

When DirectAdmin is accessed from a user owned domain it will automatically use a certificate for that particular domain (from the ./data/users/... directory). The certificate configured in directadmin.conf should be for the server host name.

The issue can be fixed by separating the server host certificate files from the user owned domain files (by restoring the default file locations in directadmin.conf).

Would you give us some details on what the intended goal of such a configuration was?
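To spot the misconfiguration described above before an update changes ownership again, the three certificate settings can be checked against the per-user data directory. This is a minimal Python sketch, not a DirectAdmin tool: the key names and the /usr/local/directadmin/data/users prefix come from this thread, while `parse_conf` and `misplaced_cert_settings` are hypothetical helpers that assume directadmin.conf consists of plain key=value lines.

```python
from pathlib import PurePosixPath

# Setting names quoted in the thread; the prefix is taken from the paths above.
CERT_KEYS = ("cakey", "cacert", "carootcert")
USER_DATA_DIR = PurePosixPath("/usr/local/directadmin/data/users")


def parse_conf(text: str) -> dict[str, str]:
    """Parse simple key=value lines, skipping blanks and # comments (assumed format)."""
    settings = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        settings[key.strip()] = value.strip()
    return settings


def misplaced_cert_settings(conf_text: str) -> dict[str, str]:
    """Return the cert settings whose path lies under the per-user data directory."""
    settings = parse_conf(conf_text)
    flagged = {}
    for key in CERT_KEYS:
        value = settings.get(key)
        if value and USER_DATA_DIR in PurePosixPath(value).parents:
            flagged[key] = value
    return flagged


if __name__ == "__main__":
    example = (
        "cakey=/usr/local/directadmin/data/users/bob/domains/example.com.key\n"
        "cacert=/usr/local/directadmin/conf/cacert.pem\n"
    )
    print(misplaced_cert_settings(example))
```

An empty result means all three settings point outside the user data tree, which is the separation recommended above.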

If I remember correctly, previously, when restoring an account whose domain already had an SPF record, DA would create another SPF record. Now, if I put a custom SPF in /usr/local/directadmin/data/templates/custom, the value there always overwrites the existing SPF value from the backup file.

The good thing is that domain has only one SPF record. However, if the domain has custom SPF too, for example using Office365, the SPF record will be overwritten.

Is there any way to check and merge SPF values, for example?
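As an illustration of what such a merge could look like outside of DA, here is a minimal Python sketch that combines two SPF TXT values into one record. It is not a DirectAdmin feature. `merge_spf` is a hypothetical helper, the choice to keep the first record's `all` qualifier is an assumption, and modifiers such as `redirect=` are not treated specially.

```python
def merge_spf(primary: str, secondary: str) -> str:
    """Merge two SPF TXT values into a single record.

    Terms keep first-seen order, duplicates are dropped, and a single
    `all` term (the first one encountered) is placed last.
    """
    terms: list[str] = []
    all_term = None
    for record in (primary, secondary):
        parts = record.split()
        if not parts or parts[0].lower() != "v=spf1":
            raise ValueError(f"not an SPF record: {record!r}")
        for term in parts[1:]:
            if term.lstrip("+-~?").lower() == "all":
                # Keep only the first `all` qualifier that was seen.
                all_term = all_term or term
            elif term not in terms:
                terms.append(term)
    if all_term:
        terms.append(all_term)
    return " ".join(["v=spf1", *terms])


if __name__ == "__main__":
    template = "v=spf1 a mx ip4:192.0.2.10 ~all"
    from_backup = "v=spf1 mx include:spf.protection.outlook.com -all"
    print(merge_spf(template, from_backup))
```

A merged record still has to stay within the SPF limit of 10 DNS-querying mechanisms, so it is worth reviewing the result rather than merging blindly.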

petersconsult

Verified User

- Joined

- Sep 10, 2021

- Messages

- 79

Thank You @unihostbrasil !!

i think that this is definitely an avenue to explore for me!

i usually create a custom server certificate using the following script (domain names edited):

Code:

#!/bin/bash
## watch out! added 'domain1.tld', 'mail.domain1.tld'
/usr/local/directadmin/scripts/letsencrypt.sh request 'host.maindomain.tld','webmail.maindomain.tld','mail.maindomain.tld','maindomain.tld','www.maindomain.tld','mail.domain2.tld','domain2.tld','mail.domain3.tld','domain3.tld','mail.domain1.tld','domain1.tld' 4096;
# wait until installed, then run:
sleep 300;
\cp /etc/httpd/conf/ssl.key/server.key /usr/local/directadmin/conf/cakey.pem;
\cp /etc/httpd/conf/ssl.crt/server.crt /usr/local/directadmin/conf/cacert.pem;
\cp /etc/httpd/conf/ssl.crt/server.ca /usr/local/directadmin/conf/carootcert.pem;
\cp /etc/httpd/conf/ssl.crt/server.crt.combined /usr/local/directadmin/conf/cacert.pem.combined;
chown diradmin:access /usr/local/directadmin/conf/ca*;
# wait;
sleep 10;
/usr/local/directadmin/custombuild/build litespeed;
# wait;
sleep 60;
killall lsphp;
# wait;
sleep 30;
/usr/local/directadmin/custombuild/build rewrite_confs;
# wait;
sleep 30;
service directadmin restart;

Running this script gets rid of the SSL errors for me..

EDIT: i'll try changing the ownership of the cert files in /usr/local/directadmin/conf/ to root:root in my script this weekend..

However, i feel it's important to note that the SSL errors appeared (only when sending email) *before* i ran the script, and disappeared *after* i ran my script..

Is the email limit not working as expected? I tested by setting up an account with a daily sending limit of 2, then sent one email to 4 recipients. DA does count, and immediately generates a notification that I have just finished sending 2 E-Mails. However, all 4 recipients still received the email. I assumed that once DA detects that the limit has been reached it would stop sending, but it seems to let the send finish anyway.

Is this a bug?

Hello,

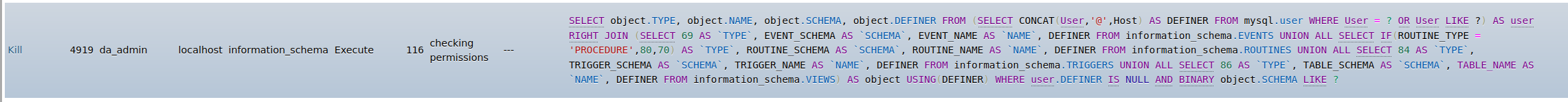

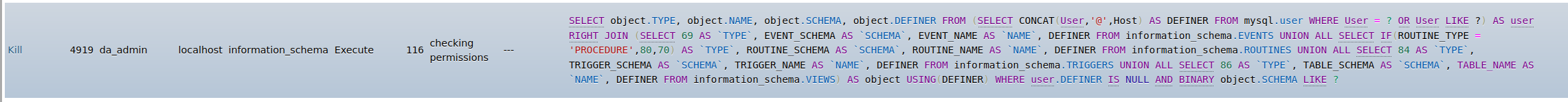

Since this update we are having issues with database management on some of our servers. When a user opens database management in DirectAdmin, it takes a couple of minutes to load the databases. In phpMyAdmin we see the following process during this time:

This only happens on our servers that run MySQL 5.6; other servers with MySQL 5.7 and MySQL 8 don't have this issue.

These servers run on CloudLinux with MySQL Governor updated to its latest version.

Any idea why this is happening?

Regards!

Hello,

The same issue is happening when using Installatron in DirectAdmin: it takes ages for a page to load and the same processes get stuck in MySQL.

What has changed in DirectAdmin that's causing these issues with MySQL 5.6? This is a serious bug and is causing a lot of problems.

Regards

All releases

| Release | Release date | End of life |
|---|---|---|
| MySQL 8.3 | January 16, 2024 | April, 2024 |
| MySQL 8.2 | October 25, 2023 | January, 2024 |
| MySQL 8.1 | June 21, 2023 | October 25, 2023 |
| MySQL 8.0 (LTS) | April 19, 2018 | April, 2026 |
| MySQL 5.7 | October 21, 2015 | October 21, 2023 |
| MySQL 5.6 | February 5, 2013 | February 5, 2021 |
| MySQL 5.5 | December 3, 2010 | December 3, 2018 |
| MySQL 5.1 | November 14, 2008 | December 31, 2013 |
| MySQL 5.0 | January 9, 2012 | |

MySQL on these servers is managed through CloudLinux, not DirectAdmin.

- Joined

- Aug 30, 2021

- Messages

- 1,168

@ViAdCk, try upgrading to DA 1.663. This version has a new option to skip database size checking. It helps speed up database list load times for users with a lot of databases. This version also has internal optimizations to load the DB list faster.

If you still have problems please open a support ticket. It could be specific to your server configuration.

Thanks for your answer, I have updated to 1.663 but the same issues persist. I will open a ticket.

ItsOnlyMe

Verified User

Has this ever been fixed anyway? I noticed that our servers are now throwing this error in a lot of our plugins. What has been changed with login_keys? I noticed this issue in June but reverted to an older DA version, since there is of course nothing in the change logs saying that anything changed here. This server has now updated to 1.667 and can't revert anymore. So what do we do to fix this issue with login keys?

@Access_Denied, this is a different issue. All other CMD_.. API endpoints are still processed by the old engine, only the database management related endpoints are transferred.

Could you please open a ticket so we could get more information about the way you use the API?

- Joined

- Aug 30, 2021

- Messages

- 1,168

@ItsOnlyMe, there were some changes to make DA more backwards compatible with incorrect API usage. If the problem still persists in DA 1.667, it should be something different. We could investigate this further, but we need access to a machine where we could see what causes such problems. It is likely that the plugins were using the API in a way that we consider incorrect. If we knew the exact usage pattern we could then decide either to support it or to give hints on how to change the API client to stay compatible.

ItsOnlyMe

Verified User

I cannot give access to our servers. Please provide an example of working and correct API usage with httpsocket.php.

We use it like this: we have a helper class that calls httpsocket.

File = DaHelper.php:

PHP:

<?php
require 'httpsocket.php';

class DaHelper
{
    private $_socket;

    // Connect to the local DirectAdmin service; the password is optional.
    public function __construct($user, $pass = "") {
        $this->_socket = new HTTPSocket();
        $this->_socket->connect('ssl://'.gethostname(), 2222);
        if (empty($pass) === true) {
            $this->_socket->set_login($user);
        } else {
            $this->_socket->set_login($user, $pass);
        }
    }

    // Run a GET request against the given command; return the raw or parsed body.
    public function callApi($command, $args = array(), $raw = false) {
        $this->_socket->set_method('GET');
        $this->_socket->query('/'.$command, $args);
        if ($raw === true) {
            $result = $this->_socket->fetch_body();
        } else {
            $result = $this->_socket->fetch_parsed_body();
        }
        return $result;
    }
}

Code from our plugin, where $username is $_SERVER['USERNAME']:

PHP:

<?php
require_once("DaHelper.php");

$dh = new DaHelper("admin|$username", "PASSWORD FROM LOGINKEY HERE");
$dau = $dh->callApi('CMD_API_ADDITIONAL_DOMAINS', ['user' => $username]);

The error gets thrown on $dau and is an auth error; in the logs I see that it tried to log in with an invalid password. I also cannot log in to the interface if I add my IP address to the allowed IPs for this login_key. It then also says invalid password.
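To rule out the plugin code itself, the same request can be reproduced with a second client and compared against what DA logs. The Python sketch below only builds the request that the PHP above describes (HTTP Basic auth with the `admin|username` login string, port 2222, a GET with a `user` argument) and does not send anything. `build_api_request` is a hypothetical helper, the host and secret are placeholders, and whether a login key is accepted for this kind of login in a given DA version is exactly the open question here, not something the sketch settles.

```python
import base64
from urllib.parse import urlencode
from urllib.request import Request


def build_api_request(host, command, login, secret, params=None, port=2222):
    """Build (but do not send) a GET request with an HTTP Basic auth header."""
    url = f"https://{host}:{port}/{command}"
    if params:
        url += "?" + urlencode(params)
    # Basic auth is base64("login:secret"); here login is "admin|username".
    token = base64.b64encode(f"{login}:{secret}".encode()).decode()
    req = Request(url, method="GET")
    req.add_header("Authorization", f"Basic {token}")
    return req


if __name__ == "__main__":
    # Placeholder values; substitute the real host, user and login key.
    req = build_api_request(
        "server.example.com",
        "CMD_API_ADDITIONAL_DOMAINS",
        "admin|bob",
        "LOGIN-KEY-VALUE",
        params={"user": "bob"},
    )
    print(req.full_url)
    print(req.get_header("Authorization"))
```

Sending it with `urllib.request.urlopen(req)` and getting the same "invalid password" response would point at the login key handling rather than at httpsocket.php.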